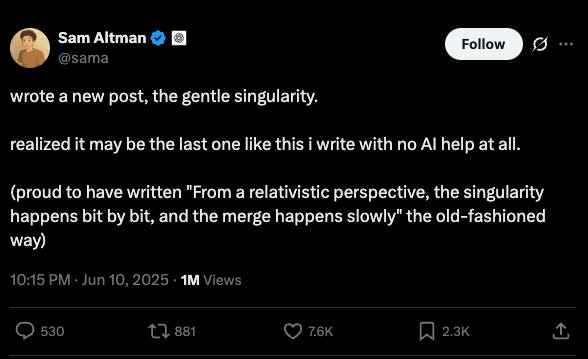

Any time someone says they are proud to have written something, you should clench your mind in anticipation of swill. And lo, Sam Altman — he of giant-plagiarism-machine factory OpenAI — is proud:

What does this even mean? Well, if you are travelling very fast (at “relativistic” speeds) then time slows down for you. Unfortunately for the sentence of which Sam Altman is proud, this means that, from your relativistic perspective, things that are not travelling so fast will seem to happen more quickly. So if we all got into a big dumb rocket and flew around Earth at some large fraction of the speed of light, the AIpocalypse, or Idiot Rapture, would actually seem to happen faster to us than it would to a terrestrial observer.
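For anyone who wants to check the arithmetic, here is a minimal sketch of the time-dilation factor from special relativity. It assumes a rocket circling at constant speed and ignores gravity altogether; the function names are made up for illustration and come from nowhere in particular.

```python
import math


def lorentz_factor(speed_fraction: float) -> float:
    """Return the Lorentz factor for a speed given as a fraction of c."""
    if not 0.0 <= speed_fraction < 1.0:
        raise ValueError("speed_fraction must satisfy 0 <= value < 1")
    return 1.0 / math.sqrt(1.0 - speed_fraction ** 2)


def earth_time_elapsed(rocket_time: float, speed_fraction: float) -> float:
    """Time that passes on Earth while rocket_time passes on board.

    Assumption: constant-speed flight, special relativity only.
    """
    return rocket_time * lorentz_factor(speed_fraction)


if __name__ == "__main__":
    # One year on board at 60% of light speed.
    print(earth_time_elapsed(1.0, 0.6))
```

At 60 per cent of light speed the factor is about 1.25, so each year on board corresponds to roughly a year and a quarter of events down here: from the rocket, the terrestrial timeline arrives sooner, not later.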

But this isn’t even the most garbagey sentence in the whole piece, which I have read so you don’t have to. It is about how the Singularity — when true artificial intelligence is unleashed upon the world — will be not just brilliant but smooth and comfortable, like a warm bath full of money.

The word “singularity” first meant “singleness of aim or purpose” (OED) — in Richard Rolle’s Psalter (1340), it is explained that holy men have a “syngularite” of heart, since they love only God — and then the quality of being single more generally, or distinctiveness or peculiarity of some kind. It acquired a more technical meaning only at the end of the 19th century, when mathematicians used it to describe a point at which a function takes an infinite value; later, physicists employed it for those solutions of Einstein’s equations that imply points of infinite density: in other words, black holes.

You may think that reading Sam Altman is a bit like being torn apart by the tidal forces of a black hole, but AI theorists use “singularity” in the special made-up way popularized by the science-fiction writer Vernor Vinge. This describes a thing that has not happened, viz., the coming into the world of an artificial superintelligence and a subsequent unimaginable technological flourishing and utter re-engineering of human society, which by analogy is sort of like a black hole’s event horizon, because we cannot see anything beyond it.

Somewhat inconsistently with the etymology, however, people who chatter about the technological singularity in this way claim that they can see beyond it, and what they can see is utopia. As Sam Altman puts it:

> We are past the event horizon; the takeoff has started.

If you are past the event horizon of a black hole, it means you can never get out. You certainly can’t take off. But never mind that now. As we fall inexorably towards a point of crushing death, what do we get? According to Sam Altman, a bright future of millions of “humanoid robots” and loads of free money for everyone.

> In the 2030s, intelligence and energy—ideas, and the ability to make ideas happen—are going to become wildly abundant.

He doesn’t explain why energy is going to become so abundant but I imagine it’s to do with one of Sam Altman’s giant plagiarism machines suddenly solving nuclear fusion, which definitely sounds plausible. What about the free money?

> There will be very hard parts like whole classes of jobs going away, but on the other hand the world will be getting so much richer so quickly that we’ll be able to seriously entertain new policy ideas we never could before.

Like giving everyone free money. Great. What will we spend our free money on?

> We’ll all get better stuff. We will build ever-more-wonderful things for each other.

Ah, better stuff. Don’t we all dream of better stuff? If you hate better stuff, you are a Luddite.

Anyway the good news is that none of this is going to happen because large language models like Sam Altman’s giant plagiarism machines will not produce superintelligence, since — as a team of Apple researchers recently showed — they can’t actually reason or follow an algorithm reliably. But isn’t ChatGPT great? Yes, thinks Sam Altman:

> In some big sense, ChatGPT is already more powerful than any human who has ever lived.

Is ChatGPT more powerful than Augustus Caesar? Than Joseph Stalin? Interesting if true. But it’s not true. Not in any “big sense”, or indeed in any sense at all.

Of course all the foregoing is needless quibbling because Sam Altman’s blog post should not be read as a serious argument about reality, but as an exercise in advertising and attempted fund-raising for what he now calls his “superintelligence research company”. Just give OpenAI more money and Earthly paradise awaits. In the meantime we can at least give thanks that this is the last time Sam Altman is going to write anything “the old-fashioned way”, i.e. by using his brain. You shouldn’t use “AI” for writing, unless you are as bad at writing as Sam Altman is, in which case it can hardly come out worse.